The ethics of artificial intelligence, and in particular the moral implications of machine learning, has drawn increasing attention in recent years. As these technologies advance, it has become essential to consider the ethical implications of their use. From privacy concerns to biases in algorithmic decision-making, anyone who develops or deploys AI systems must navigate numerous moral questions. The intersection of AI and ethics has therefore become a key area of focus for researchers, policymakers, and industry professionals alike.

This intersection has attracted interest from many fields. Some people focus on the broader societal impact of AI and machine learning and how these technologies will shape our future. Others are concerned about the misuse of AI and its consequences for individual rights and freedoms. The use of AI in domains such as healthcare and autonomous vehicles raises especially difficult questions. As a result, the moral implications of machine learning remain a compelling and relevant subject of inquiry.

Understanding AI and Ethics

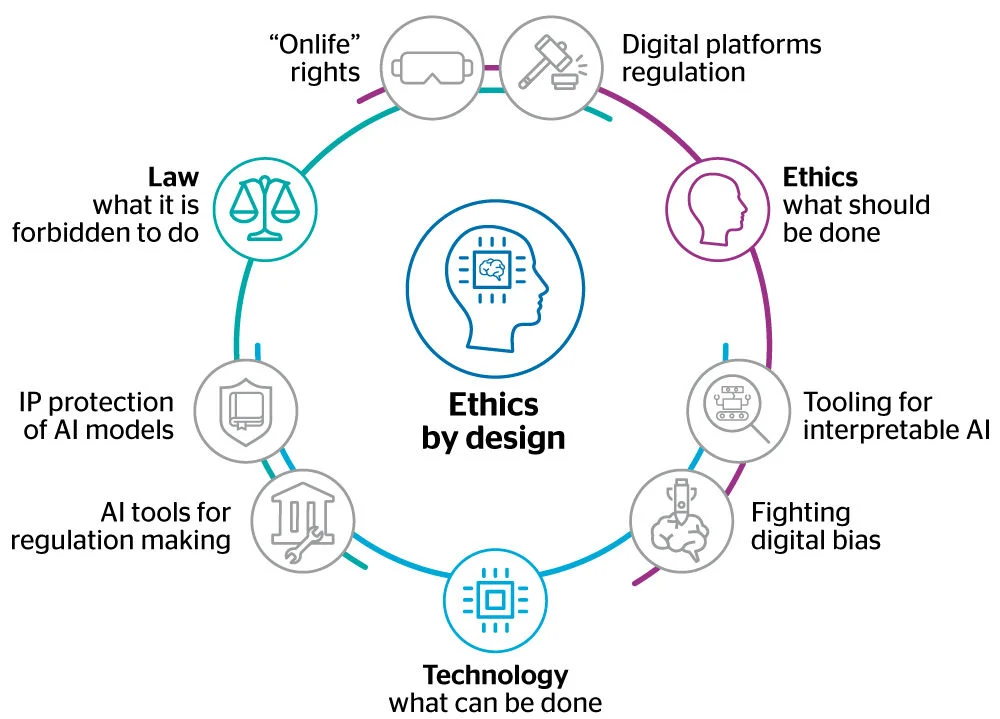

Artificial Intelligence (AI) has the potential to greatly benefit society, but it also raises important ethical concerns. As AI technologies become more advanced and integrated into more aspects of our lives, it is crucial to consider the moral implications of these systems. Ethical considerations in AI include privacy, transparency, accountability, and fairness. These concerns are especially pertinent in machine learning, where algorithms learn patterns from data rather than following explicitly programmed rules, and where their outputs often feed into decisions with little or no human review.

Furthermore, as AI systems become increasingly autonomous, questions arise about their ability to make ethical decisions and the potential consequences of their actions. It is important for developers, policymakers, and society as a whole to engage in discussions about the ethical use of AI and to establish guidelines that promote the responsible and beneficial deployment of these technologies.

The Impact of AI on Society

AI technologies have the potential to significantly impact various aspects of society, including employment, healthcare, education, and governance. While these advancements offer numerous benefits, they also raise ethical concerns regarding job displacement, algorithmic bias, and the equitable distribution of AI-related benefits. For example, the use of AI in hiring processes and predictive policing has sparked debates about fairness and discrimination. Additionally, the potential for AI to exacerbate existing societal inequalities is a significant ethical consideration.

It is essential to critically examine the potential consequences of AI on society and to ensure that these technologies are developed and deployed in a manner that promotes ethical principles such as fairness, accountability, and transparency. Proactive measures, such as ethical impact assessments and inclusive stakeholder engagement, can help mitigate the negative impacts of AI on society and foster its responsible and beneficial integration.

Ethical Considerations in Machine Learning

Machine learning, a subset of AI, presents distinct ethical considerations because its algorithms learn from data. One of the primary concerns is algorithmic bias: the training data used to develop a system may reflect and perpetuate societal biases. This can produce discriminatory outcomes, particularly in sensitive domains such as lending, law enforcement, and healthcare. In addition, some models, such as deep neural networks, are difficult to interpret, which makes it harder to explain individual decisions, assign accountability, and anticipate unintended consequences.

Ethical considerations in machine learning also include privacy and consent, since collecting and analyzing large datasets raises questions about the ethical use of personal information. Furthermore, because machine learning systems can make decisions without human intervention, ethical guidelines are needed to govern their behavior. Addressing these considerations is essential to ensure that machine learning technologies are developed and used in a manner that upholds fundamental ethical principles.

Regulatory and Policy Implications

The ethical considerations surrounding AI and machine learning have prompted discussions about the need for regulatory frameworks and policies to govern their development and deployment. Policymakers are faced with the challenge of balancing innovation and societal benefits with the ethical concerns associated with these technologies. Regulatory approaches may include establishing standards for ethical AI, implementing transparency requirements for algorithmic decision-making, and creating mechanisms for accountability in the event of AI-related harm.

Furthermore, international collaboration and coordination are essential to address the global nature of AI and machine learning technologies. Shared ethical principles and regulatory standards can help ensure consistency and promote responsible innovation across borders. It is imperative for regulatory and policy frameworks to adapt to the rapidly evolving landscape of AI and machine learning, while upholding ethical considerations and safeguarding the well-being of individuals and society as a whole.

Responsible Development and Deployment of AI

Responsible development and deployment of AI entail integrating ethical considerations into every stage of the technology lifecycle, from design and development to implementation and monitoring. This approach involves promoting transparency in AI systems, ensuring the fairness and accountability of algorithmic decision-making, and prioritizing the well-being of individuals and communities impacted by AI technologies. Responsible AI also involves actively engaging with diverse stakeholders, including ethicists, policymakers, and the general public, to foster a comprehensive understanding of the ethical implications of AI.

Moreover, responsible AI development necessitates ongoing assessment and mitigation of potential risks, such as algorithmic bias and privacy breaches. By prioritizing ethical considerations and the societal impact of AI, developers and organizations can contribute to the creation of AI systems that align with moral and ethical principles, ultimately fostering trust and acceptance of these technologies within society.

Impact on Privacy and Data Security

The increasing use of AI and machine learning raises significant concerns about privacy and data security. AI systems often rely on vast amounts of data, including personal information, to function effectively. This reliance on data collection and analysis raises ethical questions about consent, data ownership, and the potential for unauthorized use or breaches of sensitive information. Furthermore, the use of AI in surveillance and monitoring applications has sparked debates about the erosion of privacy rights and individual autonomy.

Addressing the ethical implications of AI on privacy and data security requires robust data protection regulations, transparent data governance practices, and proactive measures to safeguard against potential abuses of personal information. Moreover, promoting a culture of ethical data use and establishing clear guidelines for the responsible handling of sensitive data are essential to mitigate the privacy and security risks associated with AI and machine learning.
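One concrete safeguard for the "responsible handling of sensitive data" mentioned above is pseudonymization: replacing direct identifiers such as email addresses with values that cannot be reversed without a separately stored secret. The sketch below assumes a keyed hash (HMAC-SHA-256 from Python's standard library) is an acceptable pseudonymization method for the use case; the function names `pseudonymize` and `pseudonymize_records` are hypothetical, and this is an illustration rather than a complete data-protection scheme.

```python
import hashlib
import hmac
import secrets


def pseudonymize(identifier: str, secret_key: bytes) -> str:
    """Replace a direct identifier with a keyed SHA-256 digest (64 hex chars).

    The same identifier and key always yield the same token, so records can
    still be joined, but the token cannot be recomputed without the key.
    """
    return hmac.new(secret_key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


def pseudonymize_records(records: list, id_field: str, secret_key: bytes) -> list:
    """Return copies of the records with id_field pseudonymized.

    The input records are not modified. Other fields are copied unchanged,
    so quasi-identifiers (age, postcode, ...) still need separate review.
    """
    cleaned_records = []
    for record in records:
        cleaned = dict(record)
        cleaned[id_field] = pseudonymize(str(record[id_field]), secret_key)
        cleaned_records.append(cleaned)
    return cleaned_records


if __name__ == "__main__":
    # Hypothetical key; in practice it would be stored apart from the data.
    key = secrets.token_bytes(32)
    patients = [{"email": "alice@example.com", "age": 34}]
    print(pseudonymize_records(patients, "email", key))
```

Pseudonymization reduces risk but does not anonymize data: the remaining fields can still allow re-identification, and anyone holding the key can link tokens back to people, so the key itself must be protected.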

Ethical Decision-making in Autonomous Systems

The increasing autonomy of AI systems raises complex ethical questions about whether their decisions align with ethical principles. Autonomous AI systems, such as self-driving cars and automated medical diagnosis tools, make decisions that can profoundly affect human safety and well-being. Ensuring that these systems put human safety and well-being first is therefore a critical ethical consideration.

Ethical decision-making in autonomous systems requires the integration of ethical frameworks and principles into the design and operation of AI technologies. Additionally, establishing mechanisms for ongoing oversight and human intervention in critical decision-making processes can help mitigate the ethical risks associated with autonomous AI systems. Engaging in interdisciplinary dialogue involving experts in ethics, technology, and policy can contribute to the development of guidelines and standards that promote ethical decision-making in autonomous systems.
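The "mechanisms for ongoing oversight and human intervention" described above can take many forms. A minimal sketch of one common pattern, assuming the model reports a confidence score between 0 and 1, is to let the system act on its own only when it is confident and the proposed action is not on a list of critical actions, and to defer to a human reviewer otherwise. The names `decide`, `Decision`, `threshold`, and `critical_actions` are hypothetical, as are the example actions.

```python
from dataclasses import dataclass
from typing import Callable, Collection


@dataclass(frozen=True)
class Decision:
    action: str
    confidence: float
    decided_by: str  # "model" or "human"


def decide(
    proposed_action: str,
    confidence: float,
    threshold: float,
    critical_actions: Collection[str],
    ask_human: Callable[[str, float], str],
) -> Decision:
    """Accept the model's proposal only if it is confident and not critical;
    otherwise hand the decision to a human reviewer."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if confidence >= threshold and proposed_action not in critical_actions:
        return Decision(proposed_action, confidence, "model")
    # The reviewer sees the proposal and its confidence and has the final say.
    final_action = ask_human(proposed_action, confidence)
    return Decision(final_action, confidence, "human")


if __name__ == "__main__":
    # Hypothetical reviewer that always refers the case to a specialist.
    def reviewer(action: str, confidence: float) -> str:
        return "refer_to_specialist"

    critical = frozenset({"deny"})
    print(decide("approve", 0.97, 0.9, critical, reviewer))
    # Decision(action='approve', confidence=0.97, decided_by='model')
    print(decide("approve", 0.55, 0.9, critical, reviewer))
    # Decision(action='refer_to_specialist', confidence=0.55, decided_by='human')
    print(decide("deny", 0.99, 0.9, critical, reviewer))
    # Decision(action='refer_to_specialist', confidence=0.99, decided_by='human')
```

Recording who made each decision also supports accountability. Note that a model's confidence score is only useful for routing if it is reasonably well calibrated, which should be checked separately.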

Mitigating Bias and Discrimination in AI

Addressing bias and discrimination in AI is a crucial ethical consideration, particularly in machine learning systems that can perpetuate and amplify societal biases. The presence of biased datasets and the lack of diversity in AI development teams can contribute to algorithmic bias, leading to discriminatory outcomes in areas such as hiring, lending, and law enforcement. Mitigating bias and discrimination in AI requires proactive measures to identify and address biases in training data, as well as promoting diversity and inclusion in AI development and governance.

Furthermore, transparency in algorithmic decision-making and the establishment of mechanisms for accountability and recourse in cases of discriminatory AI outcomes are essential to address these ethical concerns. By actively working to mitigate bias and discrimination in AI, developers and organizations can contribute to the creation of more equitable and ethical AI systems that align with fundamental principles of fairness and justice.
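Identifying bias usually starts with measuring outcomes across groups. The sketch below shows one simple audit, assuming outcomes are available as (group, selected) pairs: it computes each group's selection rate and the ratio of the lowest rate to the highest, sometimes called the disparate impact ratio. The function names and the example data are hypothetical. In US employment contexts, a ratio below 0.8 (the "four-fifths rule") is commonly treated as a signal of possible adverse impact; it is a screening heuristic, not proof of discrimination, and it says nothing about differences in error rates between groups.

```python
from collections import defaultdict


def selection_rates(outcomes):
    """outcomes: iterable of (group, selected) pairs, where selected is a bool.

    Returns a dict mapping each group to the fraction of its members selected.
    """
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in outcomes:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Lowest group selection rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        raise ValueError("no group has any positive outcomes")
    return min(rates.values()) / highest


if __name__ == "__main__":
    # Hypothetical screening results for two applicant groups, A and B.
    outcomes = ([("A", True)] * 6 + [("A", False)] * 4
                + [("B", True)] * 3 + [("B", False)] * 7)
    rates = selection_rates(outcomes)
    print(rates)                          # {'A': 0.6, 'B': 0.3}
    print(disparate_impact_ratio(rates))  # 0.5
```

A low ratio is a prompt for investigation, for example into the training data or the features the model relies on, rather than an automatic verdict.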

| Topic | Focus |
|---|---|
| Artificial Intelligence (AI) | Understanding the moral implications of AI and how it affects society. |
| Ethical Considerations | Exploring the ethical dilemmas and responsibilities that come with the development and use of AI. |
| Machine Learning | Examining the impact of machine learning algorithms on decision-making and human behavior. |

AI and Ethics: Navigating the Moral Implications of Machine Learning addresses the complex intersection of artificial intelligence, ethical considerations, and machine learning. It delves into the moral implications of AI on society, explores the ethical dilemmas and responsibilities that come with AI development and use, and examines the impact of machine learning algorithms on decision-making and human behavior.